Salesforce AI Research Introduces LaTRO: A Self-Rewarding Framework for Enhancing Reasoning Capabilities in Large Language Models

Large language models (LLMs), useful for answering questions and generating content, are now being trained to handle tasks requiring advanced reasoning, such as complex problem-solving in mathematics, science, and logical deduction. Improving reasoning capabilities within LLMs is a core focus of AI research, aiming to empower models to conduct sequential thinking processes. This area’s enhancement could enable more robust applications in diverse fields by allowing models to navigate through complex reasoning tasks independently.

A persistent challenge in LLM development is optimizing their reasoning abilities without external feedback. Current LLMs perform well on relatively simple tasks but need help with multi-step or sequential reasoning, where an answer is derived through a series of connected logical steps. This limitation restricts LLMs’ utility in tasks that require a logical progression of ideas, such as solving intricate mathematical problems or analyzing data in a structured way. Consequently, building self-sufficient reasoning capabilities into LLMs has become essential to expand their functionality and effectiveness in tasks where reasoning is key.

Researchers have experimented with several inference-time methods to address these challenges to improve reasoning. One prominent approach is Chain-of-Thought (CoT) prompting, which encourages the model to break down a complex problem into manageable parts, making each decision step-by-step. This method enables models to follow a structured approach toward problem-solving, making them better suited for tasks requiring logic and precision. Other approaches, like Tree-of-Thought and Program-of-Thought, allow LLMs to explore multiple reasoning paths, providing diverse approaches to problem-solving. While effective, these methods focus primarily on runtime improvements and do not fundamentally enhance reasoning ability during the model’s training phase.

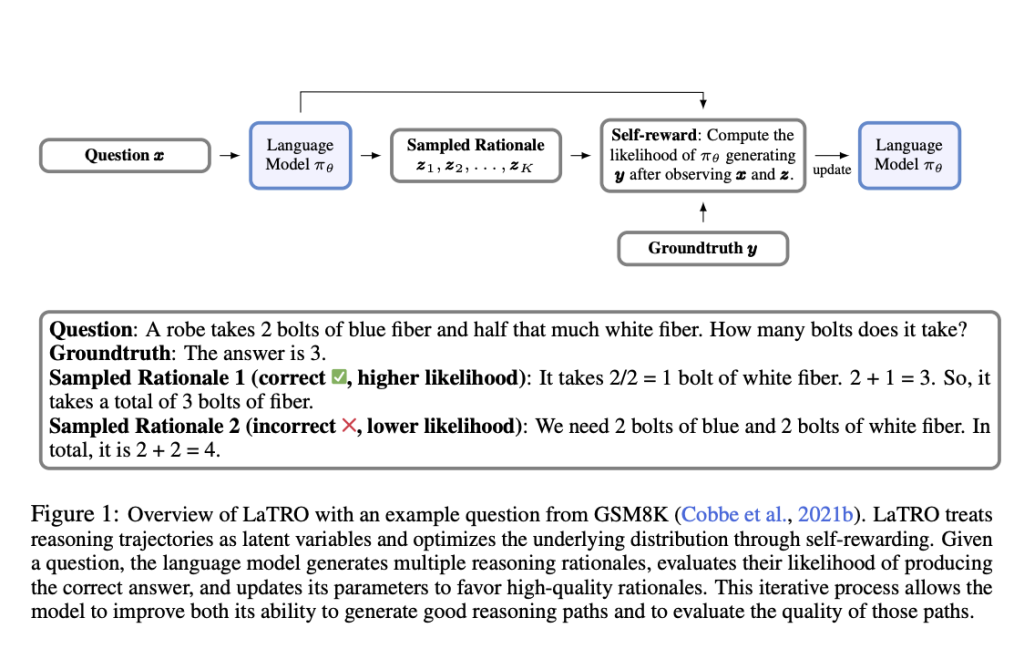

Researchers from Salesforce AI Research have introduced a new framework called LaTent Reasoning Optimization (LaTRO). LaTRO is an innovative approach that transforms the reasoning process into a latent sampling problem, offering an intrinsic enhancement to the model’s reasoning capabilities. This framework allows LLMs to refine their reasoning pathways through a self-rewarding mechanism, which enables them to evaluate and improve their responses without relying on external rewards or supervised feedback. By focusing on a self-improvement strategy, LaTRO advances reasoning performance at the training level, creating a foundational change in how models understand and tackle complex tasks.

LaTRO’s methodology is grounded in sampling reasoning paths from a latent distribution and optimizing these paths through variational techniques. LaTRO utilizes a unique self-rewarding mechanism at its core by sampling multiple reasoning paths for a given question. Each path is evaluated based on its likelihood of producing a correct answer, with the model then adjusting its parameters to prioritize paths with higher success rates. This iterative process enables the model to concurrently enhance its ability to generate quality reasoning paths and assess the effectiveness of these paths, thus fostering a continual self-improvement cycle. Unlike conventional approaches, LaTRO does not depend on external reward models, making it a more autonomous and adaptable framework for enhancing reasoning in LLMs. Furthermore, by shifting the reasoning optimization to the training phase, LaTRO effectively reduces computational demands during inference, making it a resource-efficient solution.

The performance of LaTRO has been rigorously tested across various datasets, with results underscoring its effectiveness. For instance, in tests on the GSM8K dataset, which includes math-based reasoning challenges, LaTRO demonstrated a substantial 12.5% improvement over base models in zero-shot accuracy. This gain indicates a marked enhancement in the model’s reasoning ability without requiring task-specific training. Furthermore, LaTRO outperformed supervised fine-tuning models by 9.6%, showcasing its ability to deliver more accurate results while maintaining efficiency. On the ARC-Challenge dataset, which focuses on logical reasoning, LaTRO again surpassed both base and fine-tuned models, significantly increasing performance. For Mistral-7B, one of the LLM architectures used, the zero-shot accuracy on GSM8K improved from 47.8% in base models to 67.3% under LaTRO with greedy decoding. In self-consistency testing, where multiple reasoning paths are considered, LaTRO achieved an additional performance boost, with a remarkable 90.5% accuracy for Phi-3.5 models on GSM8K.

In addition to quantitative results, LaTRO’s self-rewarding mechanism is evident in its qualitative improvements. The method effectively teaches LLMs to evaluate reasoning paths internally, producing concise and logically coherent answers. The experimental analysis reveals that LaTRO enables LLMs to better utilize their latent reasoning potential, even in complex scenarios, thus reducing reliance on external evaluation frameworks. This advancement has implications for many applications, especially in fields where logical coherence and structured reasoning are essential.

In conclusion, LaTRO offers an innovative and effective solution to enhance LLM reasoning through self-rewarding optimization, setting a new standard for model self-improvement. This framework enables pre-trained LLMs to unlock their latent potential in reasoning tasks by focusing on training-time reasoning enhancement. This advancement by Salesforce AI Research highlights the potential for autonomous reasoning in AI models and demonstrates that LLMs can self-evolve into more effective problem-solvers. LaTRO represents a significant leap forward, bringing AI closer to achieving autonomous reasoning abilities across various domains.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[FREE AI WEBINAR] Implementing Intelligent Document Processing with GenAI in Financial Services and Real Estate Transactions

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.