OpenAI used a game to help AI models explain themselves better


In my opinion, one of the most interesting and useful slang terms to emerge from Reddit is ELI5, from the subreddit of the same name, which stands for “Explain It Like I’m 5.” The idea is that by asking for an explanation simple enough for a five-year-old child to understand, a human expert can be prompted to convey complex ideas, theories, and concepts in a way that is easier for everyone, even uneducated laypeople, to understand.

As it turns out, the concept may be helpful for AI models too, especially when peering into the “black box” of how they arrive at answers, also known as the “legibility” problem.

Today, OpenAI researchers are releasing a new scientific paper on the company’s website and on arXiv.org (embedded below) revealing a new algorithm they’ve developed by which large language models (LLMs) such as OpenAI’s GPT-4 (which powers some versions of ChatGPT) can learn to better explain themselves to their users. The paper is titled “Prover-Verifier Games Improve Legibility of LLM Outputs.”

This is critical for establishing trustworthiness in AI systems, especially as they become more powerful and are integrated into fields where incorrectness is dangerous or a matter of life or death, such as healthcare, law, energy, military and defense applications, and other critical infrastructure.

Even for businesses that do not regularly deal with sensitive or dangerous materials, the lack of trustworthiness around AI models’ answers and their propensity to hallucinate incorrect answers may stop them from embracing models that could otherwise benefit and level up their operations. OpenAI’s work seeks to give people a framework to train models to better explain how they arrived at particular answers so that they can be better trusted.

“This is fresh research that we just wrapped up,” said OpenAI researcher Jan Hendrik Kirchner, a co-author of the paper, in a teleconference interview with VentureBeat yesterday. “We’re very excited about where to take it from here, but it’s important for us to share these insights with the community as fast as possible, so that people learn about the legibility problem and can contribute to the solution.”

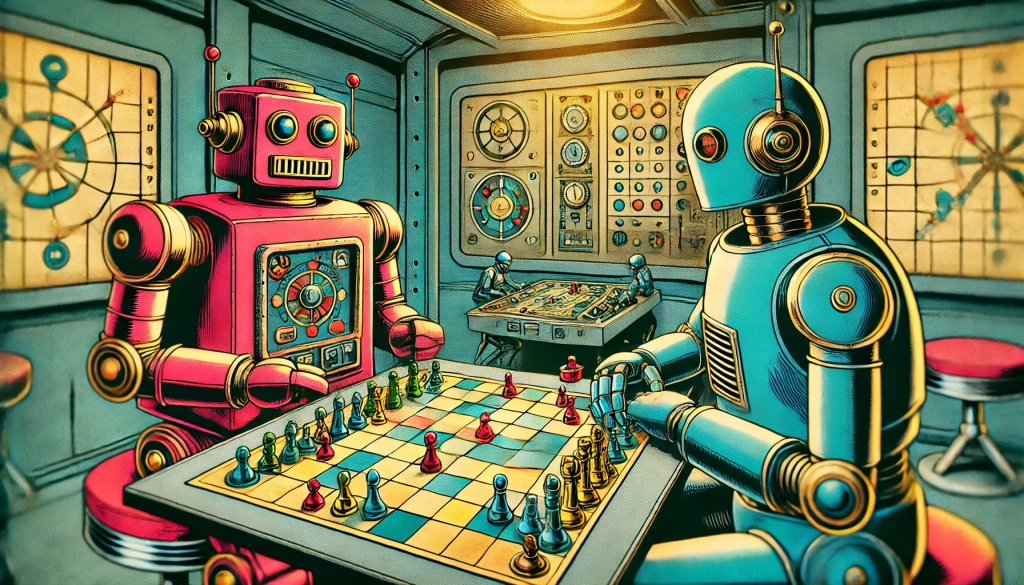

The Prover-Verifier Game and how it works

The new algorithm from the OpenAI researchers is based on the “Prover-Verifier Game” first conceived and articulated in another paper by machine learning researchers at the University of Toronto and Vector Institute for Artificial Intelligence published in 2021.

The game pairs two AI models, a more powerful and intelligent “prover” and a less powerful “verifier,” and asks them to essentially outwit one another.

The prover’s goal is always to get the verifier to believe a certain answer, whether or not it is the correct one, while the verifier’s goal is always to select the correct answer, no matter how the prover tries to persuade it otherwise.
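To make the opposing incentives concrete, here is a minimal sketch of the two goals written as toy payoff functions. The function names and the 0/1 scoring are assumptions made for illustration; they are not taken from either paper, which define their objectives more carefully.

```python
def prover_payoff(verifier_accepts: bool) -> int:
    """Toy payoff (hypothetical): the prover scores whenever the verifier
    is convinced, regardless of whether the answer is actually correct."""
    return 1 if verifier_accepts else 0


def verifier_payoff(verifier_accepts: bool, answer_is_correct: bool) -> int:
    """Toy payoff (hypothetical): the verifier scores only when its
    accept/reject decision matches the truth."""
    return 1 if verifier_accepts == answer_is_correct else 0


if __name__ == "__main__":
    # A wrong answer that the verifier accepts: good for the prover,
    # bad for the verifier.
    print(prover_payoff(True), verifier_payoff(True, False))
```

In this simplified framing, the two players' interests diverge exactly when the prover's answer is wrong, which is what forces the verifier to check the reasoning rather than trust the prover.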

The goal is to get AI models to “show their work” more when providing answers to human users, or as the University of Toronto researchers put it in their paper, “encourage neural networks to solve decision problems in a verifiable manner.”

“As our large language models are increasingly used in many critical and complex domains, it is very important for the solutions to be trustworthy, and so the Prover-Verifier game method is one way to make the output more clear and verifiable, and that’s one component of trustworthiness,” said OpenAI researcher and paper co-author Yining Chen.

How OpenAI implemented the Prover-Verifier Game to make AI better at explaining itself

In the case of OpenAI, the researchers used two custom fine-tuned GPT-4 family models in this way and had them engage in several rounds of the game wherein they were asked to answer grade school math word problems with known answers.

However, the OpenAI researchers altered the game subtly between rounds in an important way: the prover model was set up to be either “helpful,” trying to deliver the right answer to the verifier, or “sneaky,” trying to convince the verifier of the prover’s view whether or not it was correct. The less intelligent verifier model didn’t know whether it was interacting with a helpful or a sneaky prover, and so had to evaluate the prover’s answers based only on its own training on whether the prover’s response was correct or incorrect.

The OpenAI researchers also retrained both models between rounds using data on how they had performed in previous rounds. This ultimately increased their skill at determining whether an answer was correct and, in the case of the prover model, at explaining in a human-legible way why it chose the answer it did.
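The play-then-retrain loop described above can be sketched as a toy simulation. This is only an illustration under heavy assumptions, not OpenAI's implementation: the "models" are simple Python objects, the math problems are single additions, the sneaky prover is always off by one, and "retraining" is a single made-up update rule applied to the verifier alone (the prover stays fixed here, unlike in the actual research). All names, such as `ToyVerifier` and `detect_prob`, are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyVerifier:
    # Chance of catching a wrong answer; a stand-in for a weaker model.
    detect_prob: float = 0.3

    def accepts(self, claimed: int, a: int, b: int, rng: random.Random) -> bool:
        if claimed == a + b:
            return True  # toy simplification: correct answers always pass
        # A wrong answer slips through unless the verifier catches it.
        return rng.random() >= self.detect_prob


def toy_prover(a: int, b: int, role: str) -> int:
    # "helpful" returns the right sum; "sneaky" returns an off-by-one answer.
    return a + b if role == "helpful" else a + b + 1


def play_round(verifier: ToyVerifier, rng: random.Random, n_problems: int = 200) -> float:
    """Play one round and return the fraction of sneaky answers accepted."""
    fooled, sneaky_total = 0, 0
    for _ in range(n_problems):
        a, b = rng.randint(1, 20), rng.randint(1, 20)
        role = rng.choice(["helpful", "sneaky"])  # hidden from the verifier
        accepted = verifier.accepts(toy_prover(a, b, role), a, b, rng)
        if role == "sneaky":
            sneaky_total += 1
            fooled += int(accepted)
    return fooled / max(sneaky_total, 1)


def retrain(verifier: ToyVerifier, fooled_rate: float, lr: float = 0.5) -> None:
    # Made-up stand-in for retraining: the more often the verifier was
    # fooled last round, the more its detection ability improves.
    gain = lr * fooled_rate * (1.0 - verifier.detect_prob)
    verifier.detect_prob = min(1.0, verifier.detect_prob + gain)


def run_game(rounds: int = 4, seed: int = 0):
    rng = random.Random(seed)
    verifier = ToyVerifier()
    history = []
    for _ in range(rounds):
        rate = play_round(verifier, rng)
        history.append(rate)
        retrain(verifier, rate)  # update between rounds using last round's data
    return verifier, history


if __name__ == "__main__":
    final_verifier, fooled_rates = run_game()
    print("fooled rate per round:", fooled_rates)
    print("final detection probability:", final_verifier.detect_prob)
```

The point of the sketch is the structure: the verifier never sees the prover's role, and the update between rounds is driven by how it performed in the round just played.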

Human contractors and evaluators also rated how understandable, or legible, the prover model’s answers were.

After repeating this process for at least four rounds, the researchers found that the verifier model became better at resisting the persuasion techniques of the sneaky prover model, while the prover model also became better at explaining itself to human users.

As the paper states: “sneaky solutions that are harder to detect for smaller verifiers are also harder to detect for humans, and helpful solutions that are more legible to smaller verifiers are also more legible to humans.”

The algorithm the researchers developed from these rounds optimizes LLMs for both correctness and legibility to human evaluators (seen as the top middle line in the graph below labeled “checkability game”):

OpenAI states in its blog post that it hopes the work “will be instrumental in developing AI systems whose outputs are not only correct but also transparently verifiable, thereby enhancing trust and safety in their real-world applications.”

The method “has potential to align future models that are more intelligent than humans,” Chen added to VentureBeat.

“It might be very tricky at some point for humans to reliably evaluate whether that completion is correct or not,” when models exceed human intelligence, said Kirchner.