Magic AI Proposes HashHop: A New Alternative to Needle in a Haystack to Evaluate LLMs’ Ultra-Long Context Ability in a Much More Robust Way

Large language models (LLMs) have advanced significantly in recent years, demonstrating impressive capabilities across many tasks. However, their performance often deteriorates on long input sequences. This limitation can hinder their use in domains that require processing large amounts of information, such as document summarization, question answering, and machine translation.

Current models are limited by short context windows, which restrict how much information they can retain and use at inference time and push them to rely on less accurate memorization instead. The problem is compounded by evaluation methods that fail to measure how well a model actually handles extensive context. Existing long-context evaluations, like the “Needle In A Haystack” test, fall short because they contain semantic hints: the target fact stands out from the surrounding text, so a model can retrieve it without genuinely handling the full context. As a result, these tests can report inflated scores for architectures with fundamentally limited long-context capabilities, such as Recurrent Neural Networks (RNNs) and State Space Models (SSMs).

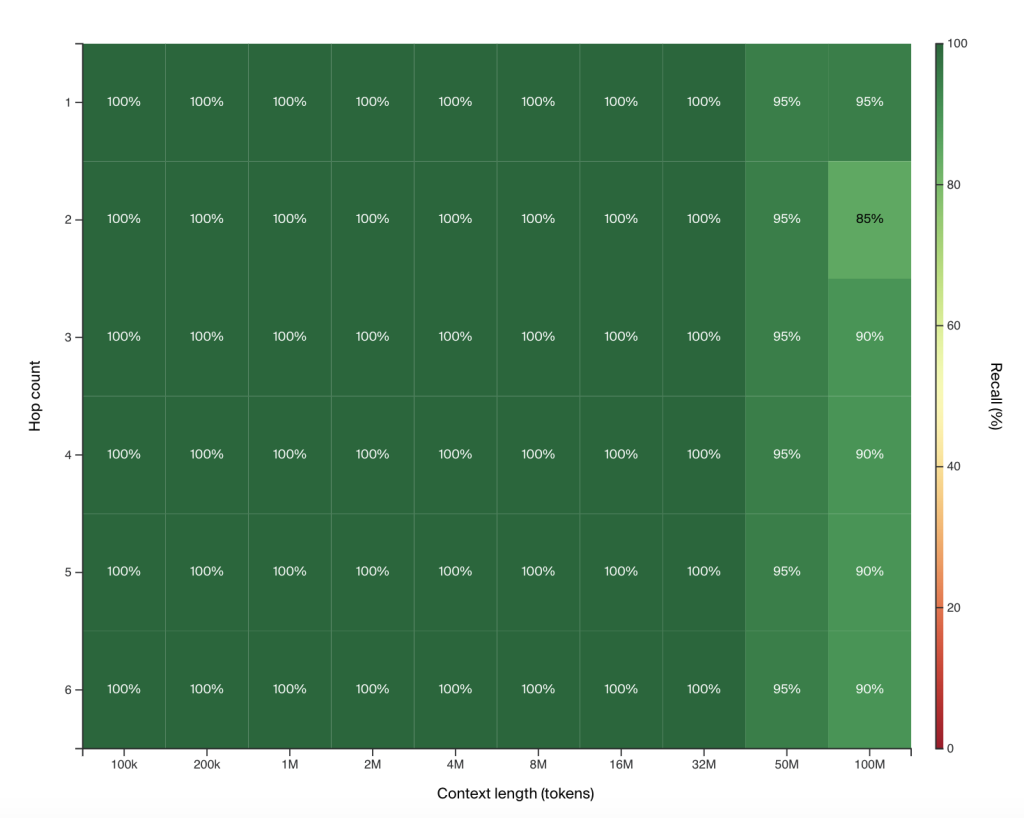

Magic AI Lab addresses the challenge of enhancing AI models’ ability to process and reason with ultra-long contexts during inference by introducing a new evaluation tool called HashHop. HashHop uses random, incompressible hash pairs, so models cannot rely on semantic shortcuts to find the answer. Additionally, Magic has developed a Long-Term Memory (LTM) model capable of handling up to 100 million tokens in context, which the company reports is far more efficient in memory and compute than existing models at that context length.

The HashHop evaluation tool measures a model’s ability to recall and reason across multiple hops of hash pairs without relying on semantic hints. The model must complete a sequence of hash pairs, which can be shuffled to ensure order- and position-invariance. The LTM-2-mini model, trained using this method, shows promising results in handling up to 100 million tokens, demonstrating its ability to reason over large contexts far more efficiently than traditional models. Unlike models such as Llama 3.1 405B, which require massive computational resources at this context length, LTM-2-mini is reported to operate at a fraction of the cost, making it more practical for real-world applications. Although the model’s performance declines beyond two hops when no “chain of thought” is used, its ability to manage two hops effectively indicates that it can build more complex reasoning circuits than traditional single-step models.
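To make the multi-hop setup concrete, here is a minimal Python sketch of how such a task could be generated and checked. It is an illustration under stated assumptions, not Magic's implementation: the function names (`make_hashhop_task`, `follow_hops`), the `->` pair format, the 8-character hash length, and the prompt wording are all hypothetical.

```python
import random
import string

# Assumed alphabet: letters and digits only, so hashes carry no semantic cues.
ALPHABET = string.ascii_letters + string.digits


def random_hash(rng, length=8):
    """Return a random string that stands in for an incompressible hash."""
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def make_hashhop_task(num_chains, hops, seed=0, hash_length=8):
    """Build shuffled hash pairs and a {start_hash: final_hash} answer key.

    Each chain has hops + 1 distinct hashes: h0 -> h1 -> ... -> h_hops.
    """
    rng = random.Random(seed)
    used = set()
    pairs = []
    answers = {}
    for _ in range(num_chains):
        chain = []
        while len(chain) < hops + 1:
            candidate = random_hash(rng, hash_length)
            if candidate not in used:  # keep every hash globally unique
                used.add(candidate)
                chain.append(candidate)
        pairs.extend(zip(chain, chain[1:]))
        answers[chain[0]] = chain[-1]
    # Shuffling removes any positional or ordering hint from the context.
    rng.shuffle(pairs)
    context = "\n".join(f"{left} -> {right}" for left, right in pairs)
    return context, answers


def follow_hops(context, start, hops):
    """Reference solver: resolve the chain with a plain lookup table."""
    table = dict(line.split(" -> ") for line in context.splitlines())
    current = start
    for _ in range(hops):
        current = table[current]
    return current


context, answers = make_hashhop_task(num_chains=3, hops=2, seed=42)
start, expected = next(iter(answers.items()))
# Hypothetical prompt: the model must jump from the start hash to the end
# of its chain without the intermediate hash being spelled out for it.
prompt = f"{context}\n\nAfter 2 hops, complete: {start} -> "
print(prompt)
```

A model's completion would then be compared against `expected`. The reference solver only confirms that the generated task is well formed; a real evaluation would scale the context to millions of tokens and score the model's own output.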

In conclusion, Magic’s work represents a notable advance in AI’s ability to handle ultra-long contexts, particularly in software development. The LTM-2-mini model, evaluated using the newly proposed HashHop method, offers a more efficient approach to processing extensive context windows, while HashHop itself offers a more reliable way to measure that ability. Together they address limitations in current models and evaluation methods, presenting a promising direction for code synthesis and other applications that require deep contextual understanding.

Pragati Jhunjhunwala is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology (IIT), Kharagpur. She is a tech enthusiast and has a keen interest in the scope of software and data science applications. She is always reading about the developments in different fields of AI and ML.