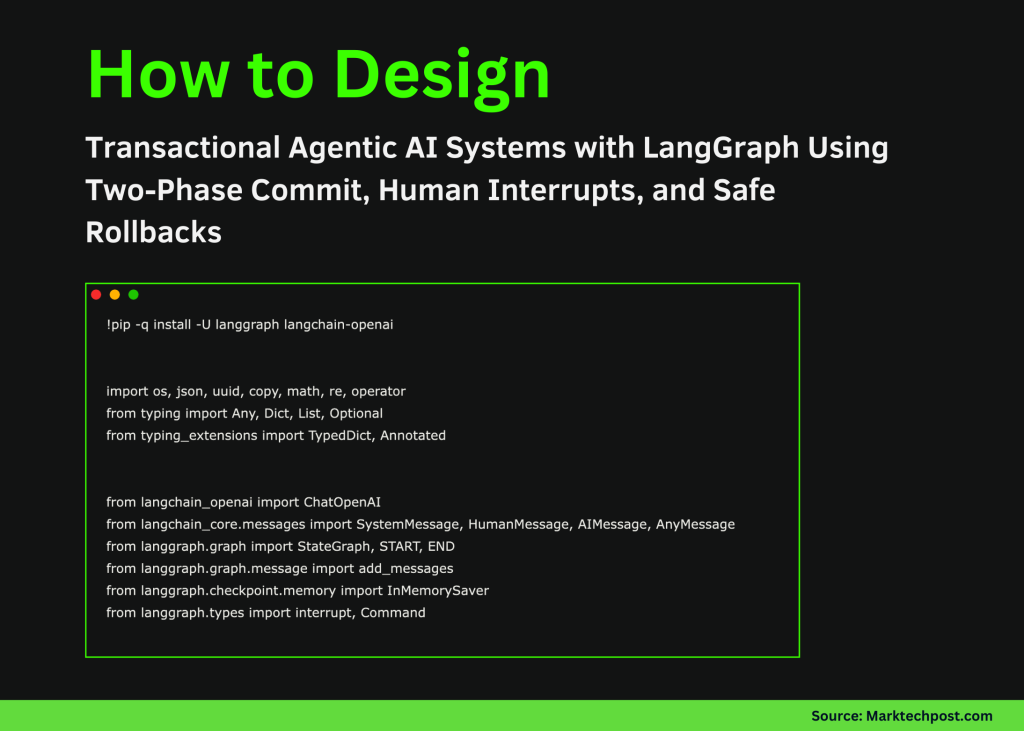

How to Design Transactional Agentic AI Systems with LangGraph Using Two-Phase Commit, Human Interrupts, and Safe Rollbacks

In this tutorial, we implement an agentic AI pattern using LangGraph that treats reasoning and action as a transactional workflow rather than a single-shot decision. We model a two-phase commit system in which an agent stages reversible changes, validates strict invariants, pauses for human approval via graph interrupts, and only then commits or rolls back. With this, we demonstrate how agentic systems can be designed with safety, auditability, and controllability at their core, moving beyond reactive chat agents toward structured, governance-aware AI workflows that run in Google Colab using OpenAI models.
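Before bringing in LangGraph, the stage, validate, approve, and commit-or-rollback sequence can be sketched in plain Python. This is a minimal illustration under our own assumptions, not the tutorial's implementation: `two_phase_commit`, `strip_commas`, and `amounts_are_numeric` are hypothetical names introduced only for this example.

```python
import copy
from typing import Callable, Dict, List, Tuple

Row = Dict[str, str]


def two_phase_commit(
    committed: List[Row],
    stage: Callable[[List[Row]], List[Row]],
    validate: Callable[[List[Row]], bool],
    approve: Callable[[List[Row]], bool],
) -> Tuple[List[Row], str]:
    """Return (rows, outcome). The `committed` list is never mutated."""
    # Phase 1: stage changes on a deep copy and check invariants.
    sandbox = stage(copy.deepcopy(committed))
    if not validate(sandbox):
        return committed, "ROLLED BACK (validation failed)"
    # Phase 2: commit only after an explicit approval.
    if not approve(sandbox):
        return committed, "ROLLED BACK (rejected)"
    return sandbox, "COMMITTED"


def strip_commas(rows: List[Row]) -> List[Row]:
    # Hypothetical staging step: remove thousands separators.
    for r in rows:
        r["amount"] = r["amount"].replace(",", "")
    return rows


def amounts_are_numeric(rows: List[Row]) -> bool:
    # Hypothetical invariant: every amount must parse as a float.
    try:
        for r in rows:
            float(r["amount"])
    except ValueError:
        return False
    return True


ledger = [{"txn_id": "T001", "amount": "1,250.50"}]
rows, outcome = two_phase_commit(
    ledger, strip_commas, amounts_are_numeric, lambda staged: True
)
print(outcome, rows)
```

Because staging works on a copy, a rollback here is simply a matter of returning the original list; nothing has to be undone.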

import os, json, uuid, copy, math, re, operator
from typing import Any, Dict, List, Optional
from typing_extensions import TypedDict, Annotated

from langchain_openai import ChatOpenAI
from langchain_core.messages import SystemMessage, HumanMessage, AIMessage, AnyMessage
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.checkpoint.memory import InMemorySaver
from langgraph.types import interrupt, Command


def _set_env_openai():
    # Use a key already in the environment, then Colab secrets, then a prompt.
    if os.environ.get("OPENAI_API_KEY"):
        return
    try:
        from google.colab import userdata
        k = userdata.get("OPENAI_API_KEY")
        if k:
            os.environ["OPENAI_API_KEY"] = k
            return
    except Exception:
        pass
    import getpass
    os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter OPENAI_API_KEY: ")


_set_env_openai()
MODEL = os.environ.get("OPENAI_MODEL", "gpt-4o-mini")
llm = ChatOpenAI(model=MODEL, temperature=0)

We set up the execution environment by importing LangGraph and initializing the OpenAI model. We load the API key from the environment, from Colab secrets, or from an interactive prompt, and we set the temperature to 0 so that downstream agent behavior is as repeatable as the API allows.

SAMPLE_LEDGER = [
    {"txn_id": "T001", "name": "Asha", "email": "[email protected]", "amount": "1,250.50", "date": "12/01/2025", "note": "Membership renewal"},
    {"txn_id": "T002", "name": "Ravi", "email": "[email protected]", "amount": "-500", "date": "2025-12-02", "note": "Chargeback?"},
    {"txn_id": "T003", "name": "Sara", "email": "[email protected]", "amount": "700", "date": "02-12-2025", "note": "Late fee waived"},
    {"txn_id": "T003", "name": "Sara", "email": "[email protected]", "amount": "700", "date": "02-12-2025", "note": "Duplicate row"},
    {"txn_id": "T004", "name": "Lee", "email": "[email protected]", "amount": "NaN", "date": "2025/12/03", "note": "Bad amount"},
]

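ALLOWED_OPS = {"replace", "remove", "add"}


def _parse_amount(x):
    # Accept numbers or numeric strings with thousands separators.
    if isinstance(x, str):
        try:
            x = float(x.replace(",", ""))
        except ValueError:
            return None
    # float("NaN") parses successfully, so reject non-finite values explicitly.
    if isinstance(x, (int, float)) and math.isfinite(x):
        return float(x)
    return None


def _iso_date(d):
    # Normalize to YYYY-MM-DD; year-last inputs are read as day-month-year.
    if not isinstance(d, str):
        return None
    d = d.replace("/", "-")
    p = d.split("-")
    if len(p) == 3 and len(p[0]) == 4:
        return d
    if len(p) == 3 and len(p[2]) == 4:
        return f"{p[2]}-{p[1]}-{p[0]}"
    return None


def profile_ledger(rows):
    # Record the index of every row with an unparseable amount or a repeated txn_id.
    seen, anomalies = {}, []
    for i, r in enumerate(rows):
        if _parse_amount(r.get("amount")) is None:
            anomalies.append(i)
        if r.get("txn_id") in seen:
            anomalies.append(i)
        seen[r.get("txn_id")] = i
    return {"rows": len(rows), "anomalies": anomalies}


def apply_patch(rows, patch):
    # Work on a deep copy so the raw ledger is never mutated.
    out = copy.deepcopy(rows)
    for op in patch:
        if op["op"] not in ALLOWED_OPS:
            raise ValueError(f"unsupported op: {op['op']!r}")
    # Field edits run first, so every idx refers to a position in the original rows.
    for op in patch:
        if op["op"] in {"add", "replace"}:
            out[op["idx"]][op["field"]] = op["value"]
    # Removals run last, highest index first, so the remaining indices stay valid.
    for op in sorted([p for p in patch if p["op"] == "remove"], key=lambda x: x["idx"], reverse=True):
        out.pop(op["idx"])
    return out


def validate(rows):
    # Invariants: every amount is a finite number and every date can be normalized.
    issues = []
    for i, r in enumerate(rows):
        if _parse_amount(r.get("amount")) is None:
            issues.append(i)
        if _iso_date(r.get("date")) is None:
            issues.append(i)
    return {"ok": len(issues) == 0, "issues": issues}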

We define the sample ledger along with the profiling, patching, normalization, and validation logic. Patches are applied to a deep copy of the rows, so the raw ledger stays untouched and any staged change can be discarded before it is committed.
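Since the patch comes from a language model, it can help to check its shape before staging it. The following is a small sketch of such a pre-check, assuming the patch schema implied by the patching logic above (`op`, `idx`, and, for edits, `field` and `value`). The `check_patch` helper is hypothetical and is not part of the original code.

```python
from typing import Any, Dict, List

ALLOWED_OPS = {"replace", "remove", "add"}


def check_patch(patch: List[Dict[str, Any]], n_rows: int) -> List[str]:
    """Return a list of human-readable problems; an empty list means the patch looks well-formed."""
    errors = []
    for k, op in enumerate(patch):
        kind = op.get("op")
        idx = op.get("idx")
        if kind not in ALLOWED_OPS:
            errors.append(f"op {k}: unsupported operation {kind!r}")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not isinstance(idx, int) or isinstance(idx, bool) or not 0 <= idx < n_rows:
            errors.append(f"op {k}: row index {idx!r} out of range")
        if kind in {"replace", "add"} and ("field" not in op or "value" not in op):
            errors.append(f"op {k}: missing 'field' or 'value'")
    return errors


good = [
    {"op": "replace", "idx": 0, "field": "amount", "value": "1250.50"},
    {"op": "remove", "idx": 3},
]
bad = [
    {"op": "drop_table", "idx": 0},
    {"op": "replace", "idx": 9, "field": "amount"},
]
print(check_patch(good, n_rows=5))
print(check_patch(bad, n_rows=5))
```

A non-empty result could be routed straight to rollback, so that a malformed patch never reaches the sandbox.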

# The class header was missing from the listing; the name TxnState is an assumption.
class TxnState(TypedDict):
    messages: Annotated[List[AnyMessage], add_messages]
    raw_rows: List[Dict[str, Any]]
    sandbox_rows: List[Dict[str, Any]]
    patch: List[Dict[str, Any]]
    validation: Dict[str, Any]
    approved: Optional[bool]


def node_profile(state):
    p = profile_ledger(state["raw_rows"])
    return {"messages": [AIMessage(content=json.dumps(p))]}


def node_patch(state):
    # The item schema is spelled out so the model's output matches apply_patch().
    system_msg = SystemMessage(content=(
        "Return a JSON patch list fixing amounts, dates, emails, duplicates. "
        'Each item is {"op": "replace" | "add" | "remove", "idx": <row index>, '
        '"field": <column>, "value": <new value>}; "remove" needs only "op" and "idx".'
    ))
    usr = HumanMessage(content=json.dumps(state["raw_rows"]))
    r = llm.invoke([system_msg, usr])
    m = re.search(r"\[.*\]", r.content, re.S)
    # With no JSON array in the reply, stage an empty patch and let validate() decide.
    patch = json.loads(m.group()) if m else []
    return {"patch": patch, "messages": [AIMessage(content=json.dumps(patch))]}


def node_apply(state):
    return {"sandbox_rows": apply_patch(state["raw_rows"], state["patch"])}


def node_validate(state):
    v = validate(state["sandbox_rows"])
    return {"validation": v, "messages": [AIMessage(content=json.dumps(v))]}


def node_approve(state):
    # interrupt() pauses the graph here; the value passed on resume becomes `decision`.
    decision = interrupt({"validation": state["validation"]})
    return {"approved": decision == "approve"}


def node_commit(state):
    return {"messages": [AIMessage(content="COMMITTED")]}


def node_rollback(state):
    return {"messages": [AIMessage(content="ROLLED BACK")]}

We model the agent's internal state and define each node in the LangGraph workflow. We express agent behavior as discrete, inspectable steps: each node returns a partial state update, and the message history is appended to rather than overwritten.
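To make the "partial update" idea concrete, here is a plain-Python sketch of how a node's returned dictionary could be merged into the state when one key has a reducer attached. It only imitates the concept: `merge_update` is a hypothetical helper, and `operator.add` stands in for `add_messages`, which in LangGraph does more than list concatenation (for example, it reconciles messages by ID).

```python
import operator
from typing import Any, Callable, Dict

State = Dict[str, Any]


def merge_update(state: State, update: State, reducers: Dict[str, Callable]) -> State:
    """Merge a node's partial update into the state without mutating the input."""
    merged = dict(state)
    for key, value in update.items():
        if key in reducers:
            # Keys with a reducer combine the old and new values.
            merged[key] = reducers[key](state.get(key, []), value)
        else:
            # Keys without a reducer are simply overwritten.
            merged[key] = value
    return merged


reducers = {"messages": operator.add}  # list concatenation as a stand-in
state = {"messages": ["profile: 2 anomalies"], "approved": None}
update = {"messages": ["COMMITTED"], "approved": True}

new_state = merge_update(state, update, reducers)
print(new_state)
```

Under this model, a node such as the commit step only needs to return the one message it adds; everything already in the history is preserved.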

# The builder construction line was missing from the listing; TxnState is the
# state TypedDict defined above (its name is an assumption).
builder = StateGraph(TxnState)

builder.add_node("profile", node_profile)
builder.add_node("patch", node_patch)
builder.add_node("apply", node_apply)
builder.add_node("validate", node_validate)
builder.add_node("approve", node_approve)
builder.add_node("commit", node_commit)
builder.add_node("rollback", node_rollback)

builder.add_edge(START, "profile")
builder.add_edge("profile", "patch")
builder.add_edge("patch", "apply")
builder.add_edge("apply", "validate")

# Gate 1: only validated sandboxes reach the human approval step.
builder.add_conditional_edges(
    "validate",
    lambda s: "approve" if s["validation"]["ok"] else "rollback",
    {"approve": "approve", "rollback": "rollback"},
)

# Gate 2: commit only on explicit approval.
builder.add_conditional_edges(
    "approve",
    lambda s: "commit" if s["approved"] else "rollback",
    {"commit": "commit", "rollback": "rollback"},
)

builder.add_edge("commit", END)
builder.add_edge("rollback", END)

# A checkpointer is required so the graph can pause at interrupt() and resume later.
app = builder.compile(checkpointer=InMemorySaver())

We construct the LangGraph state machine and explicitly encode the control flow between profiling, patching, validation, approval, and finalization. We use conditional edges to enforce governance rules rather than rely on implicit model decisions: a failed validation or a rejected approval always routes to rollback.
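The two routing decisions can also be written as named functions, which makes them easy to unit-test without building a graph. This is a sketch under our own assumptions: `route_after_validate` and `route_after_approve` are hypothetical names, and the fail-closed handling of missing keys is a design choice added for this example.

```python
from typing import Any, Dict


def route_after_validate(state: Dict[str, Any]) -> str:
    # Fail closed: a missing or malformed validation result goes to rollback.
    validation = state.get("validation") or {}
    return "approve" if validation.get("ok") is True else "rollback"


def route_after_approve(state: Dict[str, Any]) -> str:
    # Only an explicit True commits; None (no decision yet) rolls back.
    return "commit" if state.get("approved") is True else "rollback"


print(route_after_validate({"validation": {"ok": True, "issues": []}}))
print(route_after_validate({"validation": {"ok": False, "issues": [4]}}))
print(route_after_approve({"approved": None}))
```

Functions like these could be passed to `add_conditional_edges` in place of the lambdas, since a routing callable only needs to map the state to one of the declared branch names.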

def run():
    state = {
        "messages": [],
        "raw_rows": SAMPLE_LEDGER,
        "sandbox_rows": [],
        "patch": [],
        "validation": {},
        "approved": None,
    }
    # The thread_id ties the paused run to its checkpoint so it can be resumed.
    cfg = {"configurable": {"thread_id": "txn-demo"}}
    out = app.invoke(state, config=cfg)

    if "__interrupt__" in out:
        # Interrupt objects are not JSON-serializable; print their payloads instead.
        print(json.dumps([i.value for i in out["__interrupt__"]], indent=2))
        decision = input("approve / reject: ").strip()
        out = app.invoke(Command(resume=decision), config=cfg)

    print(out["messages"][-1].content)


run()

We run the transactional agent and handle human-in-the-loop approval through graph interrupts. When the graph pauses, we collect a decision and resume the same thread with Command(resume=...), demonstrating how agentic workflows can pause, accept external input, and safely conclude with either a commit or a rollback.
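The approval node compares the resumed value to the exact string "approve", so input such as "Approve" or "yes" would be treated as a rejection. One way to make that explicit is to normalize the human reply before resuming. The helper below is a hypothetical sketch; the set of accepted words is our own assumption.

```python
APPROVE_WORDS = {"approve", "approved", "yes", "y"}  # assumed vocabulary


def normalize_decision(raw: object) -> str:
    """Map free-form input to 'approve' or 'reject'; anything unclear is a rejection."""
    if not isinstance(raw, str):
        return "reject"
    return "approve" if raw.strip().lower() in APPROVE_WORDS else "reject"


for reply in ["approve", "  Approve ", "YES", "ok?", "", None]:
    print(repr(reply), "->", normalize_decision(reply))
```

The normalized value could then be passed as the resume payload, which keeps the default outcome on the safe side: only a clear approval leads to a commit.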

In conclusion, we showed how LangGraph enables us to build agents that reason over states, enforce validation gates, and collaborate with humans at precisely defined control points. We treated the agent not as an oracle, but as a transaction coordinator that can stage, inspect, and reverse its own actions while maintaining a full audit trail. This approach highlights how agentic AI can be applied to real-world systems that require trust, compliance, and recoverability, and it provides a practical foundation for building production-grade autonomous workflows that remain safe, transparent, and human-supervised.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.