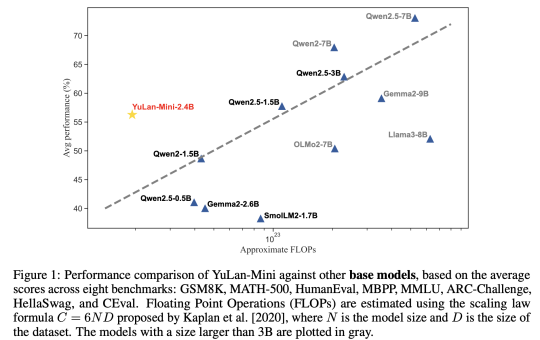

YuLan-Mini: A 2.42B Parameter Open Data-efficient Language Model with Long-Context Capabilities and Advanced Training Techniques

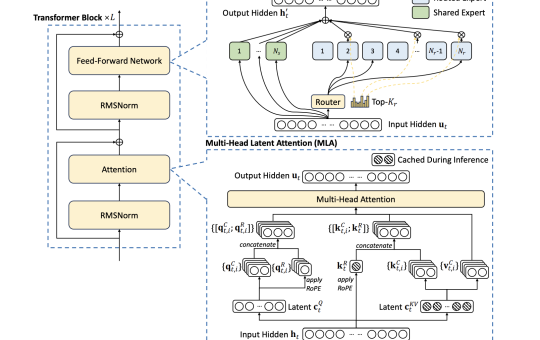

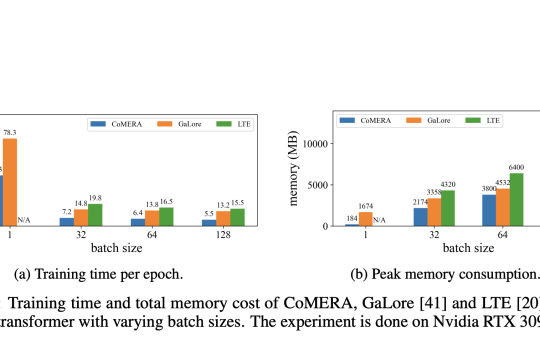

Large language models (LLMs) built using transformer architectures heavily depend on pre-training with large-scale data to predict sequential tokens. This...