Beyond Monte Carlo Tree Search: Unleashing Implicit Chess Strategies with Discrete Diffusion

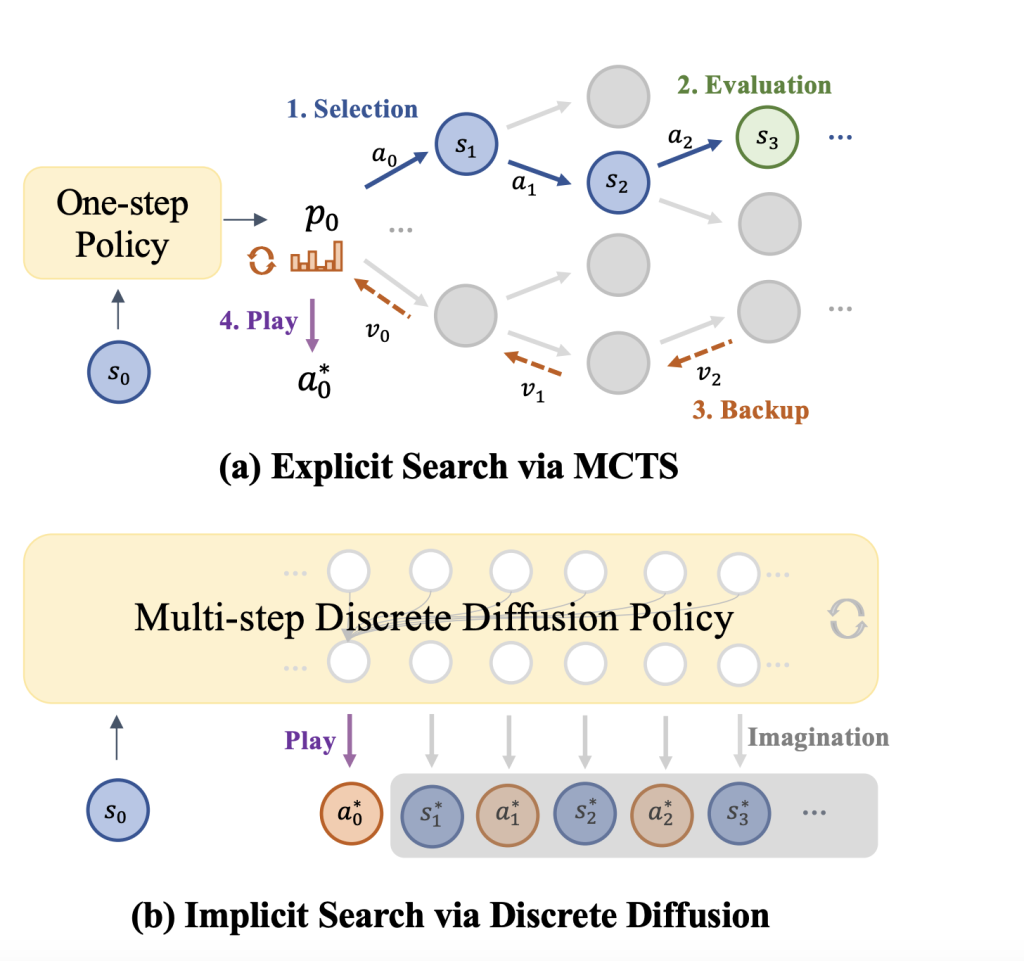

Large language models (LLMs) generate text one token at a time, which limits their ability to plan for tasks that require multiple reasoning steps, such as structured writing or problem-solving. This lack of long-term planning hurts their coherence and decision-making in complex scenarios. Some approaches evaluate several alternatives before committing to a choice, which improves prediction precision. However, they incur higher computational costs and are prone to errors when their forecasts of the future are incorrect.

Explicit search algorithms such as Monte Carlo Tree Search (MCTS) and beam search are popular in AI planning and decision-making, but they have inherent limitations. They rely on repeated simulations of the future, which drive up computation costs and make them unsuitable for real-time systems. They also depend on a value model to evaluate each state; if that model is inaccurate, its errors propagate through the search. Because longer predictions introduce more errors, these errors accumulate and reduce decision accuracy. This is particularly problematic in complex tasks that require long-term planning, where accurate foresight is hard to maintain and outcomes suffer.
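
To make the compounding-error argument concrete, here is a toy calculation under a deliberately simplifying assumption: each predicted future step is correct independently with probability p, so an h-step lookahead is entirely correct with probability p^h. Real value-model errors are neither independent nor uniform across steps, and the function name `lookahead_accuracy` is hypothetical.

```python
# Toy model (assumption): every predicted future step is correct
# independently with probability p, so a full h-step lookahead is
# correct with probability p ** h.


def lookahead_accuracy(p: float, horizon: int) -> float:
    """Probability that all `horizon` predicted steps are correct."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    if horizon < 0:
        raise ValueError("horizon must be non-negative")
    return p ** horizon


if __name__ == "__main__":
    # Even a 90%-accurate per-step predictor degrades quickly with depth.
    for h in (1, 2, 4, 8, 16):
        print(f"horizon={h:2d}  accuracy={lookahead_accuracy(0.9, h):.3f}")
```

With p = 0.9, the chance that the whole lookahead is correct falls to about 0.43 by horizon 8 and about 0.19 by horizon 16.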

To mitigate these issues, researchers from The University of Hong Kong, Shanghai Jiaotong University, Huawei Noah’s Ark Lab, and Shanghai AI Laboratory proposed DIFFUSEARCH, a discrete diffusion-based framework that removes the need for explicit search algorithms like MCTS. Instead of relying on costly search processes, DIFFUSEARCH trains the policy to directly predict and use representations of the future, refining these predictions iteratively with a diffusion model. Integrating the world model and the policy into a single model reduces computational overhead while improving efficiency and accuracy in long-term planning.
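
The iterative refinement can be pictured through the forward (noising) process of absorbing-state, or masked, discrete diffusion, one common form of discrete diffusion. The sketch below assumes that form, a linear masking rate of t/T, and a 1/t loss weight used purely as a stand-in; it does not reproduce the paper's exact noise schedule or its λt weighting, and all names and tokens are hypothetical. The usage passes T=20 to match the 20 diffusion timesteps reported for the experiments.

```python
import random
from typing import Dict, List, Tuple

MASK = "[MASK]"  # the absorbing "noise" token


def mask_rate(t: int, T: int) -> float:
    """Assumed linear schedule: fraction of tokens masked at step t of T."""
    return t / T


def forward_mask(tokens: List[str], t: int, T: int, rng: random.Random) -> List[str]:
    """Corrupt a clean sequence by independently replacing each token
    with MASK with probability t / T."""
    rate = mask_rate(t, T)
    return [MASK if rng.random() < rate else tok for tok in tokens]


def training_example(tokens: List[str], T: int, rng: random.Random
                     ) -> Tuple[int, List[str], Dict[int, str], float]:
    """Sample t uniformly from 1..T, corrupt the sequence, and return the
    masked positions the denoiser must recover plus a 1/t loss weight."""
    t = rng.randint(1, T)
    noisy = forward_mask(tokens, t, T, rng)
    targets = {i: tok for i, (tok, n) in enumerate(zip(tokens, noisy)) if n == MASK}
    weight = 1.0 / t  # stand-in weight, not the paper's λt
    return t, noisy, targets, weight


if __name__ == "__main__":
    rng = random.Random(0)
    future = ["e2e4", "<state-1>", "e7e5", "<state-2>"]  # hypothetical tokens
    t, noisy, targets, weight = training_example(future, T=20, rng=rng)
    print(f"t={t} weight={weight:.3f}")
    print("noisy:", noisy)
    print("targets:", targets)
```

In this sketch, a training step would apply cross-entropy only at the positions in `targets`, scaled by `weight`; generation runs the process in reverse, starting from an all-masked sequence.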

The framework trains the model with supervised learning, using Stockfish as an oracle to label board states from chess games. The authors examine several ways of representing the future and select the action-state (s-asa) representation for its simplicity and efficiency. Rather than predicting the future sequence one token at a time, the model uses discrete diffusion: a self-attention network iteratively denoises the sequence, gradually improving its action predictions. At inference time, DIFFUSEARCH avoids costly marginalization over future states by sampling directly from the trained model. An easy-first decoding strategy denoises the most predictable tokens first, which improves accuracy.
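
The easy-first decoding idea can be sketched as follows. The layout produced by `interleave_future` (a1, s1, a2, s2, ...) is an assumption about what an s-asa target looks like, and `toy_denoiser` is a hypothetical stand-in with hard-coded, context-free probabilities in place of the trained transformer, which would condition on the current board and on the partially decoded sequence. "Confidence" here means the probability of the most likely token at each masked position.

```python
from typing import Callable, Dict, List, Optional, Tuple

MASK: Optional[str] = None  # marks a position that is not decoded yet

# A denoiser maps a partially decoded sequence to a {token: probability}
# distribution for every position that is still masked.
Denoiser = Callable[[List[Optional[str]]], Dict[int, Dict[str, float]]]


def interleave_future(actions: List[str], states: List[str]) -> List[str]:
    """Assumed s-asa target layout: a1, s1, a2, s2, ... (one pair per step)."""
    if len(actions) != len(states):
        raise ValueError("need one future state per future action")
    seq: List[str] = []
    for a, s in zip(actions, states):
        seq.extend([a, s])
    return seq


def easy_first_decode(length: int, denoise: Denoiser, steps: int
                      ) -> Tuple[List[Optional[str]], List[List[int]]]:
    """Start fully masked; at each step commit the most confident masked
    positions, spreading the remainder over the steps that are left."""
    seq: List[Optional[str]] = [MASK] * length
    order: List[List[int]] = []
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        if not masked:
            break
        preds = denoise(seq)
        # Most likely token and its probability at each masked position.
        best = {i: max(preds[i].items(), key=lambda kv: kv[1]) for i in masked}
        k = -(-len(masked) // (steps - step))  # ceil: finish by the last step
        easiest = sorted(masked, key=lambda i: best[i][1], reverse=True)[:k]
        for i in easiest:
            seq[i] = best[i][0]
        order.append(easiest)
    return seq, order


def toy_denoiser(seq: List[Optional[str]]) -> Dict[int, Dict[str, float]]:
    """Hypothetical stand-in for the trained network; ignores context."""
    table: Dict[int, Dict[str, float]] = {
        0: {"e2e4": 0.60, "d2d4": 0.40},
        1: {"<s1>": 0.95, "<s1'>": 0.05},
        2: {"e7e5": 0.50, "c7c5": 0.30, "c7c6": 0.20},
        3: {"<s2>": 0.80, "<s2'>": 0.20},
    }
    return {i: table[i] for i, tok in enumerate(seq) if tok is MASK}


if __name__ == "__main__":
    decoded, order = easy_first_decode(4, toy_denoiser, steps=4)
    print("decoded:", decoded)
    print("reveal order:", order)
    print("move to play:", decoded[0])
```

With these toy probabilities, positions are revealed in the order 1, 3, 0, 2 rather than left to right, which is the point of easy-first decoding; the first token of the decoded sequence is the move the policy would play.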

Researchers evaluated DIFFUSEARCH against three transformer-based baselines: State-Action (S-A), State-Value (S-V), and Action-Value (SA-V) models, trained with behavioral cloning, value-based decision-making, and comparison across legal actions, respectively. Using a dataset of 100k chess games, with states encoded in FEN and actions in UCI notation, they implemented GPT-2-based models with the Adam optimizer, a learning rate of 3e-4, a batch size of 1024, an 8-layer architecture (7M parameters), a horizon of 4, and 20 diffusion timesteps. Evaluation covered action accuracy, puzzle accuracy, and Elo ratings from a 6000-game internal tournament.

DIFFUSEARCH outperformed S-A by 653 Elo and by 19% in action accuracy, and it exceeded SA-V despite using 20 times fewer data records. Discrete diffusion with linear λt achieved the highest accuracy (41.31%), surpassing autoregressive and Gaussian diffusion alternatives. DIFFUSEARCH retained predictive ability for future moves, though accuracy declined for steps further into the future, and performance improved with more attention layers and refined decoding. As an implicit search method, it proved competitive with explicit MCTS-based approaches.
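
Elo gaps are easier to interpret as expected scores. Under the standard Elo logistic model, a player rated D points higher has an expected score of 1 / (1 + 10^(-D/400)) per game. Applying this general formula (not a figure reported in the paper) to the 653-point gap over S-A gives an expected score of roughly 0.98. The function name `elo_expected_score` is hypothetical.

```python
def elo_expected_score(rating_diff: float) -> float:
    """Expected per-game score of the player rated `rating_diff` points
    higher, under the standard Elo logistic model (base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** (-rating_diff / 400.0))


if __name__ == "__main__":
    # Expected score implied by the reported 653-Elo gap over S-A.
    print(f"{elo_expected_score(653):.3f}")
```

The model is symmetric: the lower-rated side's expected score is one minus the higher-rated side's, and equal ratings give 0.5.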

In summary, the study shows that implicit search via discrete diffusion can effectively replace explicit search and improve chess decision-making. The model outperformed both searchless and explicit-search policies and demonstrated its potential to learn future-imitative strategies. Although it relies on an external oracle and a limited dataset, the work points to future improvements through self-play and long-context modeling. More broadly, the method could be applied to improve next-token prediction in language models. It provides a starting point for further research on implicit search in AI planning and decision-making.



Divyesh is a consulting intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering from the Indian Institute of Technology, Kharagpur. He is a Data Science and Machine Learning enthusiast who wants to apply these technologies to challenges in the agricultural domain.