Nvidia open-sources Run:ai Scheduler to foster community collaboration

Following up on previously announced plans, Nvidia said that it has open-sourced new elements of the Run:ai platform, including the KAI Scheduler.

KAI Scheduler is a Kubernetes-native GPU scheduling solution released under the Apache 2.0 license. Originally developed within the Run:ai platform, it is now open to the community and will continue to be packaged and delivered as part of the NVIDIA Run:ai platform.

Nvidia said the initiative underscores its commitment to advancing both open-source and enterprise AI infrastructure and to fostering an active, collaborative community that encourages contributions, feedback, and innovation.

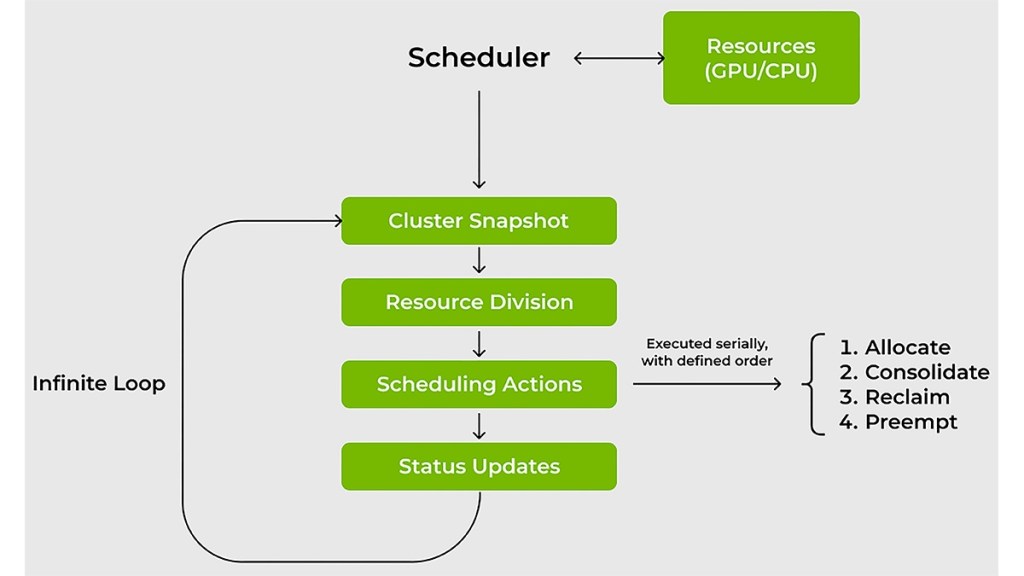

In their post, Nvidia’s Ronen Dar and Ekin Karabulut gave an overview of KAI Scheduler’s technical details, highlighted its value for IT and ML teams, and explained the scheduling cycle and actions.

Benefits of KAI Scheduler

Managing AI workloads on GPUs and CPUs presents a number of challenges that traditional resource schedulers often fail to meet. KAI Scheduler was developed specifically to address these issues: managing fluctuating GPU demands, reducing wait times for compute access, providing resource guarantees for GPU allocation, and seamlessly connecting AI tools and frameworks.

Managing fluctuating GPU demands

AI workloads can change rapidly. For instance, you might need only one GPU for interactive work such as data exploration, then suddenly require several GPUs for distributed training or multiple experiments. Traditional schedulers struggle with such variability.

The KAI Scheduler continuously recalculates fair-share values and adjusts quotas and limits in real time, automatically matching the current workload demands. This dynamic approach helps ensure efficient GPU allocation without constant manual intervention from administrators.
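To make fair-share recalculation concrete, here is a minimal Python sketch. It is not KAI Scheduler's actual algorithm. The function name `fair_share`, the queue names, and the policy itself are illustrative assumptions. Each queue first receives up to its guaranteed quota. Any leftover GPUs are then dealt out evenly, one at a time, to queues that still have unmet demand. Because the function is cheap, it can simply be rerun every time demand changes.

```python
from typing import Dict


def fair_share(capacity: int, quota: Dict[str, int], demand: Dict[str, int]) -> Dict[str, int]:
    """Hypothetical fair-share sketch: quota first, then an even split of leftover GPUs.

    Assumes the quotas sum to at most `capacity`.
    """
    # Phase 1: each queue gets its deserved share, capped by what it actually asks for.
    share = {q: min(quota.get(q, 0), demand[q]) for q in demand}
    remaining = capacity - sum(share.values())
    # Phase 2: deal leftover GPUs round-robin to queues that still want more.
    while remaining > 0:
        hungry = sorted(q for q in demand if share[q] < demand[q])
        if not hungry:
            break  # nobody needs more; the rest stays idle
        for q in hungry:
            if remaining == 0:
                break
            share[q] += 1
            remaining -= 1
    return share
```

For example, on an 8-GPU cluster, `fair_share(8, {"a": 2, "b": 2}, {"a": 1, "b": 6, "c": 4})` returns `{'a': 1, 'b': 5, 'c': 2}`. Queue `a` uses only what it asks for, and its unused quota flows to `b` and `c`.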

Reduced wait times for compute access

For ML engineers, time is of the essence. The scheduler reduces wait times by combining gang scheduling, GPU sharing, and a hierarchical queuing system. You can submit batches of jobs and step away, confident that tasks will launch as soon as resources are available, in line with priorities and fairness.
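Gang scheduling means that a distributed job's pods start together or not at all. This prevents half-started jobs from holding GPUs while they wait for their peers. The Python sketch below illustrates the all-or-nothing idea under simple assumptions. Nodes are reduced to free-GPU counts, pods are placed with a greedy first-fit pass, and the name `gang_schedule` is hypothetical rather than part of KAI Scheduler.

```python
from typing import Dict, List, Optional


def gang_schedule(free_gpus: Dict[str, int], pod_requests: List[int]) -> Optional[Dict[int, str]]:
    """Place every pod of a job or none of them (all-or-nothing, greedy first-fit)."""
    tentative = dict(free_gpus)  # work on a copy so a failed attempt changes nothing
    placement: Dict[int, str] = {}
    # Place the largest pods first; they are the hardest to fit.
    for idx in sorted(range(len(pod_requests)), key=lambda i: -pod_requests[i]):
        need = pod_requests[idx]
        # First node (by name) with enough free GPUs for this pod.
        node = next((n for n, free in sorted(tentative.items()) if free >= need), None)
        if node is None:
            return None  # one pod cannot fit, so the whole gang keeps waiting
        tentative[node] -= need
        placement[idx] = node
    free_gpus.update(tentative)  # commit only after every pod found a slot
    return placement
```

With nodes `{"n1": 4, "n2": 2}`, a four-pod job needing 2 GPUs per pod is rejected and leaves the cluster untouched. A three-pod job of the same shape is placed in full.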

To further optimize resource usage, even when demand fluctuates, the scheduler employs two strategies for both GPU and CPU workloads:

Bin-packing and consolidation: Maximizes compute utilization by combating resource fragmentation (packing smaller tasks into partially used GPUs and CPUs) and by addressing node fragmentation (reallocating tasks across nodes).

Spreading: Evenly distributes workloads across nodes, GPUs, and CPUs to minimize the per-node load and maximize resource availability per workload.
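The difference between the two strategies comes down to which node a pod is sent to. The Python sketch below is an illustration, not KAI Scheduler's scoring logic. The names `pick_node` and `place_all` and the "binpack"/"spread" strategy strings are assumptions. Bin-packing picks the fullest node that still fits. Spreading picks the emptiest. Consolidation, which moves already-running tasks, is not modeled.

```python
from typing import Dict, List, Optional


def pick_node(free_gpus: Dict[str, int], request: int, strategy: str) -> Optional[str]:
    """Choose a node for one pod under a bin-pack or spread policy (hypothetical sketch)."""
    candidates = [n for n, free in free_gpus.items() if free >= request]
    if not candidates:
        return None
    if strategy == "binpack":
        # Fullest node that still fits, keeping whole nodes free for large jobs.
        return min(candidates, key=lambda n: (free_gpus[n], n))
    if strategy == "spread":
        # Emptiest node, keeping per-node load low.
        return min(candidates, key=lambda n: (-free_gpus[n], n))
    raise ValueError(f"unknown strategy: {strategy}")


def place_all(free_gpus: Dict[str, int], requests: List[int], strategy: str) -> Dict[str, int]:
    """Place pods one by one and return the free GPUs left on each node."""
    free = dict(free_gpus)
    for request in requests:
        node = pick_node(free, request, strategy)
        if node is not None:
            free[node] -= request
    return free
```

Placing two single-GPU pods on two empty 4-GPU nodes shows the trade-off. Bin-packing leaves `{"n1": 2, "n2": 4}`, so one node stays fully free. Spreading leaves `{"n1": 3, "n2": 3}`.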

Resource guarantees or GPU allocation

In shared clusters, some researchers secure more GPUs than necessary early in the day to ensure availability throughout. This practice can lead to underutilized resources, even when other teams still have unused quotas.

KAI Scheduler addresses this by enforcing resource guarantees. It ensures that AI practitioner teams receive their allocated GPUs, while also dynamically reallocating idle resources to other workloads. This approach prevents resource hogging and promotes overall cluster efficiency.
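Guarantees and reallocation of idle GPUs can be pictured as lending and reclaiming. Queues may borrow idle GPUs beyond their quota, but a queue below its guarantee can take them back. The Python sketch below assumes this simple model. It is not KAI Scheduler's reclaim action, and `plan_reclaim` and the team names are hypothetical. Only the guaranteed part of a request can trigger preemption, and the queues furthest over quota give GPUs back first.

```python
from typing import Dict, List, Tuple


def plan_reclaim(free: int, allocated: Dict[str, int], quota: Dict[str, int],
                 queue: str, need: int) -> List[Tuple[str, int]]:
    """Return (borrower_queue, gpus_to_preempt) pairs so `queue` can reach its guarantee."""
    # A queue may only reclaim up to its own guaranteed quota.
    entitled = max(0, quota.get(queue, 0) - allocated.get(queue, 0))
    to_reclaim = min(need, entitled) - free  # idle GPUs are used before preempting anyone
    if to_reclaim <= 0:
        return []
    plan: List[Tuple[str, int]] = []
    # Borrowers are queues running above their quota; the largest overage goes first.
    borrowers = sorted(
        (q for q in allocated if q != queue and allocated[q] > quota.get(q, 0)),
        key=lambda q: (quota.get(q, 0) - allocated[q], q),
    )
    for q in borrowers:
        if to_reclaim == 0:
            break
        take = min(allocated[q] - quota.get(q, 0), to_reclaim)
        plan.append((q, take))
        to_reclaim -= take
    return plan
```

Suppose `team-b` has borrowed all 8 GPUs of a cluster where each team is guaranteed 4. A 3-GPU request from `team-a` yields `[("team-b", 3)]`. In the reverse case, an over-quota `team-b` cannot preempt anyone.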

Seamlessly connecting AI tools and frameworks

Connecting AI workloads with various AI frameworks can be daunting. Traditionally, teams face a maze of manual configurations to tie together workloads with tools like Kubeflow, Ray, Argo, and the Training Operator. This complexity delays prototyping.

KAI Scheduler addresses this with a built-in podgrouper that automatically detects and connects with these tools and frameworks, reducing configuration complexity and accelerating development.
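One way to picture what a pod grouper does is to trace each pod back to the workload that created it. All pods sharing that top-level owner are then treated as one schedulable group. The Python sketch below assumes the grouping keys on the top of the owner chain. It uses a toy `Obj` class as a stand-in for Kubernetes `ownerReferences`, and both `Obj` and `group_pods` are hypothetical names. The real podgrouper also applies framework-specific logic that is not modeled here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Obj:
    kind: str
    name: str
    owner: Optional["Obj"] = None  # toy stand-in for a Kubernetes ownerReference


def top_owner(obj: Obj) -> Obj:
    # Walk up the owner chain, e.g. Pod -> Job -> a framework custom resource.
    while obj.owner is not None:
        obj = obj.owner
    return obj


def group_pods(pods: List[Obj]) -> Dict[str, List[str]]:
    """Group pods by their top-level owner so each workload is scheduled as one unit."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for pod in pods:
        root = top_owner(pod)
        groups[f"{root.kind}/{root.name}"].append(pod.name)
    return dict(groups)
```

Under this model, the master and worker pods of a Kubeflow `PyTorchJob` named `train-llm` land in a single `PyTorchJob/train-llm` group. A standalone notebook pod forms a group of its own.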